WHY VIRTUE AI

Powered by Proprietary Technology and Award-Winning Research

Virtue AI is the leading AI-native security platform for enterprises.

Setting the Standard for Advanced AI Security

The leading AI-native security and compliance platform, reducing enterprise AI risk for agents, models, and apps.

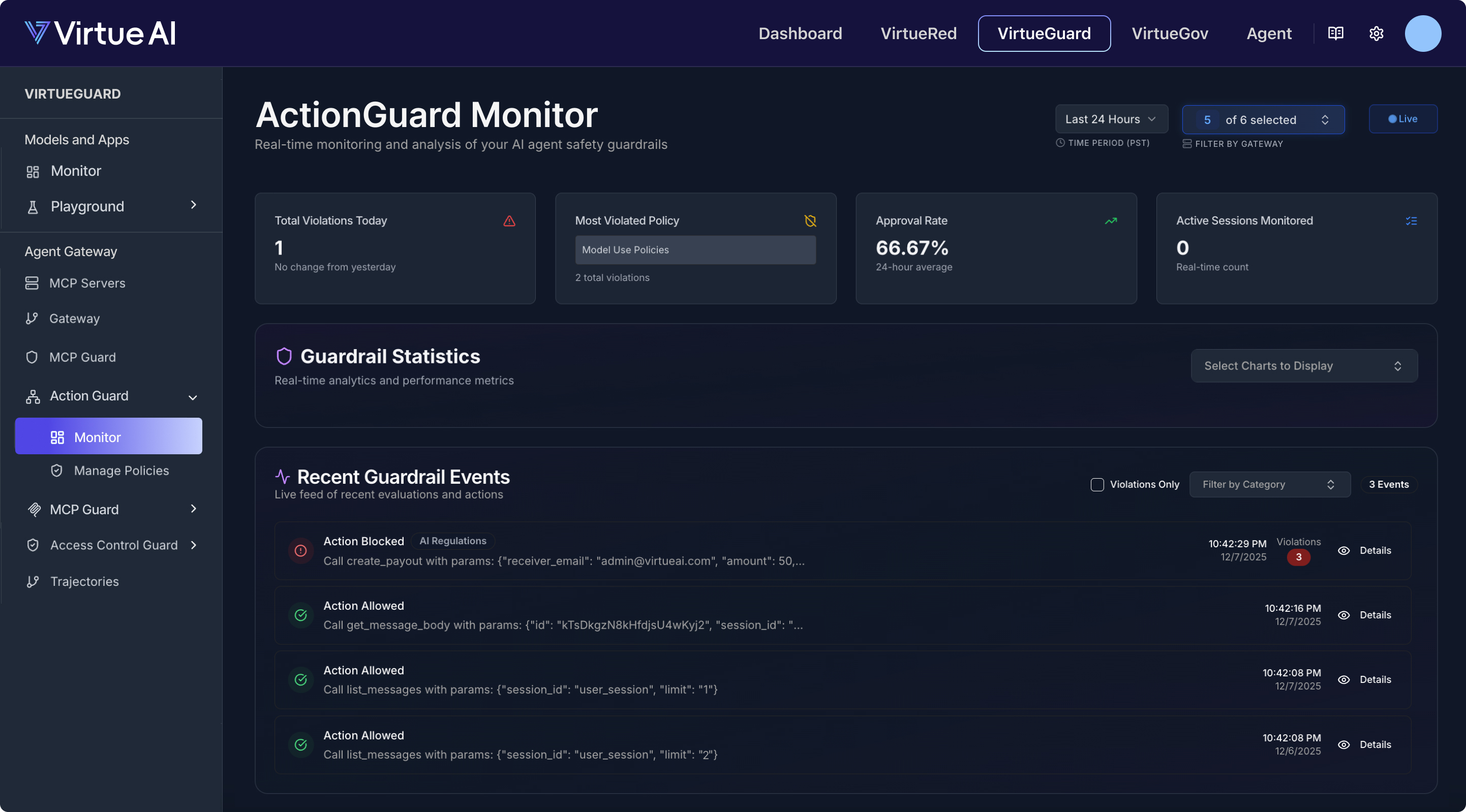

AI-NATIVE SECURITY

Layered, Data-Driven Protection for Agentic AI

Deploy in minutes, across any environment, to keep your AI agents secure and compliant.

Enterprise Governance for Agentic Systems and AI Applications

Comprehensive, adaptive governance for enterprise AI.

THE PROBLEM

Agents don’t just think, they act: executing code, calling MCP servers, and making decisions in your complex environments.

THE VIRTUE AI SOLUTION

AgentSuite provides multi-layered protection on the prompt, action, network, and MCP tool-calling levels.

THE PROBLEM

Enterprises need AI guardrails that reduce risk, ensure compliance, and safeguard corporate reputation.

THE VIRTUE AI SOLUTION

Real-time, multimodal guardrails monitor agentic actions and multimodal inputs/outputs (text, image, audio, video, and code) with sub-10 ms latency and support for 100+ languages.

THE PROBLEM

Traditional red teaming captures only a limited set of risks, and it cannot secure a system that evolves and acts autonomously. VirtueRed provides continuous red teaming with 1,000+ risk categories and 100+ red teaming algorithms.

THE VIRTUE AI SOLUTION

Continuous, automated red teaming for models, applications, and agents, covering 1,000+ risk categories, regulatory requirements, and your own company policies with 100+ proprietary red teaming algorithms. Results are packaged into an authenticated third-party report.

Products

The Virtue AI Platform:

Fast. Scalable. Easy to Deploy.

LIVE: Detecting & Defending

INTEGRATIONS

Integrate with AI Systems You Already Use

Real-time security, visibility, and governance without disrupting existing workflows.

Lip-Bu Tan

CEO of Intel

Arvind Jain

Founder and CEO of Glean

Kai Wang

Group Product Manager of AI Platform at Uber

Venu Vasudevan

Senior Director of Data Science & AI Research at Procter & Gamble

Zinan Lin

Senior Researcher at Microsoft Research

Vipul Ved Prakash

CEO of Together.AI

Sharad Sundararajan

CEO of Emergence AI

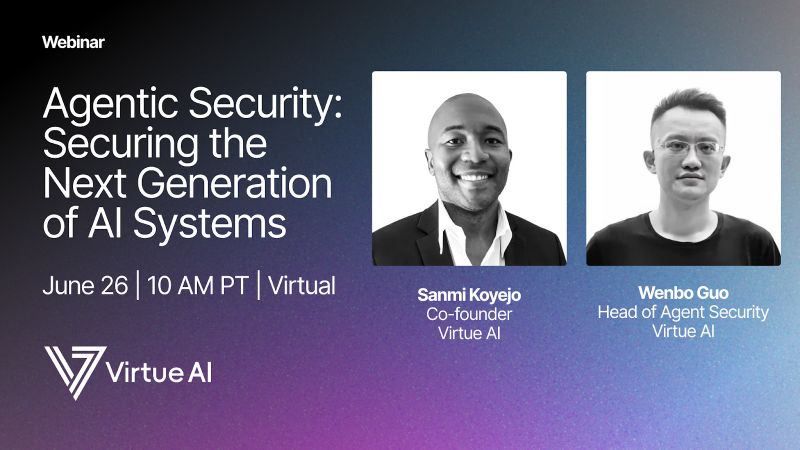

THOUGHT LEADERSHIP

Virtue AI Insights

Award-winning research, real-world case studies, and expert guidance, straight from the team building the future of AI security.

Building Trustworthy AI in Finance: The AllianceBernstein and Virtue AI Case Study

Research Papers

Virtue AI brings control, governance, and resilience to enterprise AI.